Last update: 07-03-2025

In Acrelia you can create experiments to help you get better results in your email campaigns. In this article, we will explain everything you need to know to get the most out of this functionality.

1. What is an A/B test?

2. What should you take into account before running an A/B test?

2.1 Determine the variable you are going to use

2.2 Define the sample size

2.3 Consider the waiting time

3. Types of A/B tests in Acrelia

3.1 A/B test by message subject

3.2 A/B test by sender

3.3 A/B test by content

3.4 A/B test by sending date

4. How to set up and send an A/B test in Acrelia

4.1 Configure and send an A/B test by message subject

4.2 Configure and send an A/B test by sender

4.3 Configure and send an A/B test by content

4.4 Configure and send an A/B test by sending date

5. Managing experiments

5.1 Pausing/deleting A/B tests

5.2 Choosing the winner

5.3 Mailing statistics

6. How the A/B test works

6.1 Decisions

6.2 Web version

6.3 Campaigns with dynamic content

In email marketing, an A/B test consists of creating two combinations (versions) of a campaign, sending them, and comparing the resulting data to identify which combination performs better.

The two combinations differ in a single element of the email campaign (message subject, content, sending date, sender...) and are sent to two randomly generated groups containing the same number of contacts. Once the winning combination has been identified, it is sent to the rest of the subscribers.

Before launching your experiment, be clear about a few fundamental elements so that the A/B test is effective.

To reach an accurate conclusion in this type of test, you must change only one element. If you change more than one variable at a time, it is very difficult to determine which one made the difference.

Examples of variables you can experiment with:

For the data from an email marketing A/B test to be meaningful, each variant needs to reach a considerable number of contacts. We recommend no fewer than 5,000 contacts.

In addition, when the contact list has fewer than 1,000 subscribers, it is advisable to follow the 80/20 rule, also known as the Pareto principle: send one combination to 10% of the contacts, the other combination to another 10%, and the better-performing combination to the remaining 80%. Read more about the Pareto Principle.

In Acrelia, to create an A/B test you have to select a list or segment that includes at least 3 contacts.

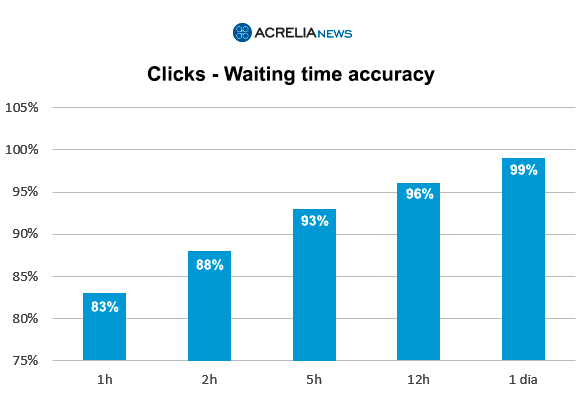

Giving the tests enough time to run improves your chances of picking the right winner. We recommend waiting at least 2 hours to determine a winner based on opens and at least 1 hour to determine a winner based on clicks.

For opens, waiting 2 hours gives an estimated accuracy rate of 80%; waiting 12 hours raises it to 90%, and waiting 24 hours raises it to 99%.

For clicks, waiting 1 hour gives an estimated accuracy rate of 83%; waiting 5 hours raises it to 95%, and after 1 day the accuracy rate is almost 100%.
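One way to read the figures above is as thresholds: a given wait only reaches the estimate of the longest quoted waiting time it has passed, so a 6-hour wait on opens still counts as 80%, because no figure is quoted between 2 and 12 hours. The sketch below encodes exactly the quoted values; `ACCURACY_BY_WAIT` and `quoted_accuracy` are hypothetical names for illustration, not part of Acrelia.

```python
from typing import Optional

# Estimated accuracy figures quoted in this article, as (hours waited, label).
# Hypothetical lookup table for illustration only.
ACCURACY_BY_WAIT = {
    "opens": [(2, "80%"), (12, "90%"), (24, "99%")],
    "clicks": [(1, "83%"), (5, "95%"), (24, "almost 100%")],
}


def quoted_accuracy(metric: str, hours_waited: float) -> Optional[str]:
    """Return the highest quoted accuracy whose waiting time has been reached.

    Returns None if the wait is shorter than the first quoted threshold.
    These are general estimates; your own list may behave differently.
    """
    reached = None
    for threshold, label in ACCURACY_BY_WAIT[metric]:  # thresholds are sorted
        if hours_waited >= threshold:
            reached = label
    return reached


print(quoted_accuracy("opens", 6))   # 80%
print(quoted_accuracy("clicks", 5))  # 95%
print(quoted_accuracy("opens", 1))   # None
```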

Keep in mind that each contact list is unique, so these values may vary from case to case. You can run several A/B tests with different metrics and waiting times to find out which combination works best for your list.

Acrelia offers 4 types of tests, depending on the variable you want to experiment with.

The A/B test by subject consists of using two subject lines that differ in some element (for example, with or without emojis, their length, or personalizing one of them). The experiment tells you which of the two combinations performed better. You can also test two preheader versions.

The A/B test by sender consists of using two different senders (e.g. company name vs. sender's name, or company name vs. department name plus company name). You can also "play" with the email address you use as the sender.

The A/B test by content consists of designing two campaigns with different design elements (images, calls to action, structure...).

The A/B test by sending date consists of sending two identical campaigns in different time slots or on different dates.

To run any of these experiments, go to the "A/B Test" section of the main menu and click "Create new experiment".

First, enter the name you want to give the test ("Name of the experiment" field), then select the list or segment you want to use ("Target audience") and finally select one of the four available types of A/B test ("Type of experiment").

On the next screen, define the sample division: the percentage of contacts who will receive each version and the percentage who will receive the winning version. To do this, move the selector along the horizontal scroll bar (keep in mind the advice in section 2.2 of this article).
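To make the sample division concrete, here is a minimal sketch, assuming the slider sets one percentage that applies to each test group and that contacts are shuffled at random before being split. `split_sample` is a hypothetical helper for illustration, not Acrelia's implementation. With 1,000 contacts and 10% per group it reproduces the 10/10/80 split described in section 2.2.

```python
import random


def split_sample(contacts, test_pct, seed=None):
    """Randomly split contacts into group A, group B and the remainder.

    Hypothetical helper: test_pct is the share of the list (0-50) that EACH
    test group receives; the remainder later gets the winning combination.
    """
    if not 0 < test_pct <= 50:
        raise ValueError("test_pct must be greater than 0 and at most 50")
    shuffled = list(contacts)
    random.Random(seed).shuffle(shuffled)  # random assignment to groups
    group_size = int(len(shuffled) * test_pct / 100)  # same size for A and B
    group_a = shuffled[:group_size]
    group_b = shuffled[group_size:2 * group_size]
    remainder = shuffled[2 * group_size:]
    return group_a, group_b, remainder


contacts = [f"user{i}@example.com" for i in range(1000)]
a, b, rest = split_sample(contacts, 10, seed=42)
print(len(a), len(b), len(rest))  # 100 100 800
```

Shuffling before slicing keeps both groups the same size while leaving the assignment of each contact to chance, which is what makes the comparison between A and B fair.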

In this step you must also decide whether you want to choose the winner yourself (manually) and launch the final campaign whenever you consider it appropriate, or let the system calculate it (automatically) based on the variable you indicate (opens or clicks). In the automatic case, you must set how long to wait from the end of the initial sendings until the system calculates the winner (a minimum of 2 hours for opens and 1 hour for clicks), and specify the time slot during which the winning sending may start. This safe sending slot prevents the campaign from going out at times that do not suit your audience, for example at night.
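The timing of the automatic option can be sketched as follows. This is a hypothetical model, not Acrelia's code: it assumes the winner is calculated exactly after the configured wait, that the winning sending starts immediately if that moment falls inside the safe slot, otherwise at the next opening of the slot, and that the slot does not cross midnight. `winner_schedule` and `MIN_WAIT_HOURS` are illustrative names; only the minimum waits come from this article.

```python
from datetime import datetime, time, timedelta

# Minimum waiting times stated in this article, in hours.
MIN_WAIT_HOURS = {"opens": 2, "clicks": 1}


def winner_schedule(initial_end: datetime, metric: str, wait_hours: float,
                    slot_start: time, slot_end: time):
    """Return (winner_decided_at, winning_send_starts_at).

    Hypothetical model; assumes slot_start < slot_end (no midnight crossing).
    """
    if wait_hours < MIN_WAIT_HOURS[metric]:
        raise ValueError(f"wait at least {MIN_WAIT_HOURS[metric]} h for {metric}")
    decided = initial_end + timedelta(hours=wait_hours)
    if slot_start <= decided.time() <= slot_end:
        return decided, decided  # already inside the safe slot
    if decided.time() < slot_start:
        # Too early: wait for the slot to open the same day.
        return decided, datetime.combine(decided.date(), slot_start)
    # Slot already closed today: start when it opens the next day.
    return decided, datetime.combine(decided.date() + timedelta(days=1), slot_start)


decided, start = winner_schedule(datetime(2025, 3, 7, 20, 30), "opens", 2,
                                 time(9, 0), time(20, 0))
print(decided, start)  # 2025-03-07 22:30:00 2025-03-08 09:00:00
```

In the example, the initial sendings end at 20:30, the winner is calculated at 22:30, and because the 09:00-20:00 slot has already closed, the winning sending waits until 09:00 the next morning instead of going out at night.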

*In the case of the A/B test by sending date, this configuration is not needed, because the sending goes to the whole database (50% A - 50% B).

To create an A/B test by subject, first go to the "A/B Test" option in the main menu and click "Create new experiment". On the initial screen, enter a name for the experiment (this is internal; your subscribers will not see it), select the list or segment and choose the "Subject" option in the "Type of experiment" section.

On the next screen, define the sample with the scroll bar and configure how the winner is chosen (manually or automatically), as described in the introduction to this section.

A/B test configuration

Next, select the campaign you want to send by clicking the "Select campaign" image. A drop-down list with all the campaigns you have created will appear. Once you have found the one you want, click "Select".

Using the configuration (gear) icon, you can edit the sender, the language of the unsubscribe and update forms, and the reply-to email.

Once your campaign is set up, define the two subjects and the two preheaders.

Click "Next" to continue creating and sending the experiment.

Sending date and time

In the next step you simply need to select whether you want to send the campaigns now or schedule them for later. Once this has been defined, click on "Next".

Summary and sending of the experiment

On the last screen you will see a summary of everything you have configured for your A/B test. Once you have confirmed that everything is OK, click on "Send" to launch or schedule your mailings.

To check on the status of your mailings, go to "A/B Test" - "Manage experiments" in the main menu.

To create an A/B test by sender, first go to the "A/B Test" option in the main menu and click "Create new experiment". On the initial screen, enter a name for the experiment (this is internal; your subscribers will not see it), select the list or segment and choose the "Sender" option in the "Type of experiment" section.

On the next screen, define the sample with the scroll bar and configure how the winner is chosen (manually or automatically), as described in the introduction to this section.

A/B test configuration

Next, select the campaign you want to send by clicking the "Select campaign" image. A drop-down list with all the campaigns you have created will appear. Once you have found the one you want, click "Select".

Using the configuration (gear) icon, you can edit the message subject and the language of the unsubscribe and update forms.

Once your campaign is set up, define the sender of each combination and the reply-to email you will use.

Sending date and time

In the next step you simply need to select whether you want to send the campaigns now or schedule them for later. Once you have defined this, click "Next".

Summary and sending of the experiment

On the last screen you will see a summary of everything you have configured in your A/B test. Once you have confirmed that everything is OK, click on "Send" to launch or schedule your mailings.

To check the status of your sendings, go to "A/B Test" - "Manage experiments" in the main menu.

To create an A/B test by content, first go to the "A/B Test" option in the main menu and click "Create new experiment". On the initial screen, enter a name for the experiment (this is internal; your subscribers will not see it), select the list or segment and choose the "Content" option in the "Type of experiment" section.

On the next screen, define the sample with the scroll bar and configure how the winner is chosen (manually or automatically), as described in the introduction to this section.

A/B test configuration

Next, select the two campaigns you want to send by clicking the "Select campaign" image. A drop-down list with all the campaigns you have created will appear. Once you have found the one you want, click "Select". Note that the system will not let you select the same campaign twice.

Using the configuration (gear) icon, you can edit the sender, the language of the unsubscribe and update forms, and the reply-to email.

Once you have selected the two campaigns, define a single subject and preheader, which will be shared by both.

To move on to the next step, click "Next".

Sending date and time

In the next step, you simply need to select whether you want to send the campaigns now or schedule them for later. To go to the last step, click "Next".

Summary and sending of the experiment

On the last screen you will see a summary of everything you have set up for your A/B test. Once you have confirmed that everything is OK, click on "Send" to launch or schedule your sendings.

To check the status of your sendings, go to "A/B Test" - "Manage experiments" in the main menu.

To create an A/B test by sending date, first go to the "A/B Test" option in the main menu and click "Create new experiment". On the initial screen, enter a name for the experiment (this is internal; your subscribers will not see it), select the list or segment and choose the "Send date" option in the "Type of experiment" section.

A/B test configuration

Next, select the campaign you want to send by clicking the "Select campaign" image. A drop-down list with all the campaigns you have created will appear. Once you have found the one you want, click "Select".

Using the configuration (gear) icon, you can edit the subject, the sender, the language of the unsubscribe and update forms, and the email address you will use for sending.

Sending date and time

In the next step, set the sending date and time for each combination. In this type of test you cannot set the same date and time for both campaigns. Click "Next" to go to the last step.

Summary and sending of the experiment

On the last screen you will see a summary of everything you have set up for your A/B test. Once you have confirmed that everything is OK, click on "Send" to launch or schedule your sendings.

To check the status of your sendings, go to "A/B Test" - "Manage experiments" in the main menu.

In "A/B Test" - "Manage experiments" you can see the status of your A/B tests. These are the different states your experiment can be in:

From there you can also check the statistics, pause or delete the test if it has not yet run, and select the winner if you chose manual selection.

If the sending has not yet started (i.e. the experiment status is "A/B sending queued"), you can pause or delete the experiment from the options bar in "A/B Test" - "Manage experiments".

You can also pause or delete it from within the experiment itself.

Note that both actions affect the whole experiment: pausing it pauses the sending of both combinations, and deleting it deletes the whole experiment.

In Acrelia's A/B tests you can choose the winner yourself, or let the system choose it based on the selected variable and send it automatically.

Manual selection of the winner

In the second step of creating and configuring your experiment, if you choose "Manually" in the "Winner selection" section, once the two combinations have been sent you must go to "A/B Test" - "Manage experiments", open the experiment and select the winning option (the "Winner A" or "Winner B" button). All the statistics of both sendings are available to help you make the right decision.

Once you have selected the winning combination and sent the campaign, the statistics of the winning campaign will be available in the same section.

Automatic selection of the winner

In the second step of creating and configuring your experiment, if you choose "Automatic" in the "Winner selection" section, the system selects the winner according to the variable you have chosen (opens or clicks) once combinations A and B have been sent and the waiting time you configured has passed.

Under "A/B Test" - "Manage experiments", if you open the experiment, you will see the winning combination marked with a yellow box.

The figures highlighted in yellow are the ones each combination had when the winner was chosen; the figures that are not highlighted are current, real-time data. They are shown separately because combinations A and B can keep receiving opens and clicks after the winner has been selected.

The statistics you have for each mailing in your A/B tests are the same as those you have for your regular mailings. To access them, you must go to "A/B Test" - "Manage experiments" in the main menu and access the corresponding A/B test. You will see that within each experiment you will have the statistics of both combinations and the statistics of the winning mailing (once it has been launched).

For more information on the meaning of each of the statistical indicators that we show in the sent campaigns, visit this other article.

Before launching your experiment, there are several considerations you should take into account about how A/B testing works in Acrelia.

Note that if none of your recipients opens or clicks either combination, the remaining recipients will receive the last combination by default (when the automatic winner sending option is selected). This can happen if you send your test to too few recipients.

If both combinations get the same number of opens or clicks, the first combination is sent to the remaining recipients (again, only when the automatic winner sending option is selected).
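The decision rules above can be summarized in a short sketch. It assumes "first combination" means A and "last combination" means B, and that raw counts of the chosen metric are compared (the two test groups are the same size, so counts and rates rank the same way). `pick_winner` is a hypothetical function for illustration, not Acrelia's code.

```python
def pick_winner(a_count: int, b_count: int) -> str:
    """Return "A" or "B" for the automatic winner sending.

    a_count / b_count: opens or clicks of each combination, whichever
    metric was chosen. Assumes A is the first and B the last combination.
    """
    if a_count == 0 and b_count == 0:
        return "B"  # nobody opened or clicked: last combination by default
    if a_count == b_count:
        return "A"  # tie: first combination is sent
    return "A" if a_count > b_count else "B"


print(pick_winner(0, 0))   # B
print(pick_winner(12, 12))  # A
print(pick_winner(8, 15))  # B
```

The zero-activity case is checked before the general tie, because although 0 vs 0 is technically a tie, the article states that the last combination is sent in that situation.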

All campaigns created with the Acrelia editor include a link at the top to view the email in the browser. If a contact who received one of the experiment's combinations opens that web version, they will always see the combination they received, even if the winning combination is different.

If your campaign history is active, it will show the winning combination. However, a subscriber who views the web version of the campaign they received will see their own combination, not the winning one.

As discussed earlier in this article, to identify which elements make a campaign perform better, it is essential to experiment with a single variable. Campaigns with dynamic content introduce additional variations in the content, so more elements come into play; they are therefore not suitable for A/B testing.
