
Testing is the only way to answer the question that haunts every marketer when launching a campaign: will it work? But what if you could get data to help you choose the best option? That is what A/B testing is for: experimenting with different hypotheses to optimise the results of your email marketing campaigns.


An A/B test is a way of comparing two campaigns (A and B) by sending them to two random groups of contacts on a list and then sending the better-performing one to the remaining contacts. To prepare the test, you create two variations of the message, choose how many contacts will take part in the test, and pick the metric that decides which variation worked better.

Once the test messages have been sent, you wait a minimum amount of time to collect the results for A and B, compare them, and send the variation that performed better to the rest of the contacts.
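The process described above can be sketched in a few lines of Python. This is only an illustration, not how any particular email tool implements it: the function names `split_for_ab_test` and `pick_winner`, the 20% test fraction and the shape of the results dictionary are all assumptions made for the example.

```python
import random

def split_for_ab_test(contacts, test_fraction=0.2, seed=None):
    """Randomly split contacts into group A, group B and the remainder.

    test_fraction is the share of the list used for the test (hypothetical
    default of 20%); it is divided equally between A and B.
    """
    rng = random.Random(seed)
    shuffled = list(contacts)          # copy so the original list is untouched
    rng.shuffle(shuffled)
    half = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]    # receives the winning variation later
    return group_a, group_b, remainder

def pick_winner(results, metric):
    """Return the variation with the highest rate for the chosen metric.

    results is assumed to look like:
    {"A": {"sent": 100, "opens": 20}, "B": {"sent": 100, "opens": 25}}
    On a tie, the first variation in the dictionary is returned.
    """
    def rate(variant):
        stats = results[variant]
        return stats[metric] / stats["sent"] if stats["sent"] else 0.0
    return max(results, key=rate)
```

Because the split is a random shuffle, neither group is chosen by hand, which is what keeps the comparison fair.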

If, while preparing your campaign, you have two subject lines that both seem good, several images you can't choose between, or call-to-action copy you have doubts about, run an A/B test!

You can test any element of your email marketing related to your objective, for example:

These tests work with both simple and more complex variations: what if I send different templates? What if I reduce the length of the email? Will I get a better response if I add more personalised content? What if I adopt a different tone of voice than usual?

An A/B test can also keep all the content exactly the same and vary only the sending time or the day of the week: do I get more opens at 8 a.m. or 3 p.m.? Do I get more conversions on Monday than on Thursday?

In addition, you can also test the concept of the campaign itself, i.e. the offer you are making. For example: is it better to give a 2 for 1 or a 30% discount? Does it work better to set a closing date for the campaign or to limit the stock?

Once you start using this type of experiment, you might be encouraged to always send your mailings this way. It's a good idea! This way you make data-driven decisions and give every campaign the best chance of a good result. Just keep in mind that some variations may take longer to prepare. Even so, this type of testing has many advantages.

An A/B test allows you to better understand your subscribers' behaviour and fine-tune each message. The bigger the list, the more you need to offer relevant content to keep subscribers interested for a longer period of time.

Sending everything to the entire list is just as bad a practice as not using statistical information to learn. If you find that a red button gets more clicks than an orange button, why would you want to keep using the one that gives you the worst data?

If opens and clicks increase, conversions are likely to increase too. Taking advantage of what you know about potential customers allows you to convince them more easily to take the step and buy your products or services.

Changing what has been working for a long time may raise doubts, but if you do an A/B test and send the usual (A) and a more daring variant (B), you can see if it is worth the risk to try new or different ideas in your next campaigns.

In marketing, you can't stand still: you must always look for ways to improve. By making small changes every now and then, you can optimise your emails very well for your subscribers.

Keep the following recommendations in mind to get the most out of your experiments.

For the data to be meaningful, you need to have a good number of subscribers for each variant. We recommend at least 5,000 contacts to get reliable data. The good thing is that the choice is made randomly, so you don't have to worry about who goes in which segment.
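To see why the number of subscribers per variant matters, a two-proportion z-test is one common way to check whether a difference between A and B is larger than random noise. The sketch below is an illustration under stated assumptions, not a method described in this article: the function name `two_proportion_z_test` is hypothetical, and the test assumes groups large enough for the normal approximation to hold.

```python
import math

def two_proportion_z_test(successes_a, sent_a, successes_b, sent_b):
    """Return (z, two-sided p-value) for the difference in rates B - A.

    "successes" are opens, clicks or conversions, depending on the metric.
    Uses the pooled-proportion z-test (normal approximation).
    """
    p_a = successes_a / sent_a
    p_b = successes_b / sent_b
    pooled = (successes_a + successes_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        return 0.0, 1.0                # no variation at all: nothing to compare
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value

# Same open rates (20% vs 23%), very different group sizes.
z_small, p_small = two_proportion_z_test(20, 100, 23, 100)
z_large, p_large = two_proportion_z_test(500, 2500, 575, 2500)
```

With 100 contacts per variant, the three-point gap is not significant at the usual 5% level, while the same gap with 2,500 contacts per variant is. The group sizes here are made up for the example.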

If you send different types of communications (newsletter, transactional messages, promotional campaigns), be clear about what you want to optimise. For example: if your goal is to sell more, make sure you optimise promotions first and then go for the rest.

To learn from an A/B test, you need to choose what you want to test and focus on one element in each mailing. For example: if I change the subject line, the day and the discount at the same time, how do I know which one is responsible for making the campaign more profitable?

For A/B testing to add value to your email marketing strategy, you need to approach it with a specific metric in mind:

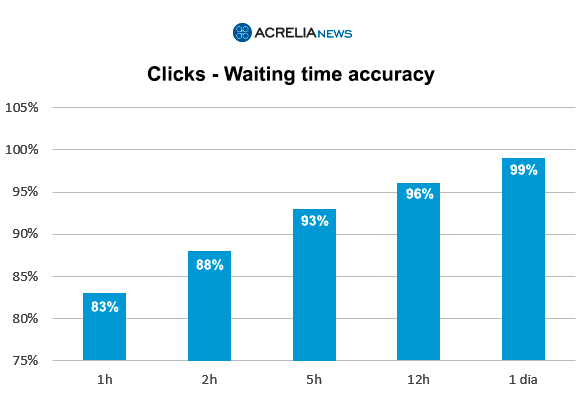

To collect enough data before the final send, wait at least a couple of hours before deciding if you are looking at opens and at least an hour if you are looking at clicks, although it is generally best to wait a day, especially if you are looking at conversions. You can pick the winner manually or schedule the send so that the variation with the best result on the chosen metric goes to the rest of the list automatically.
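If you automate the decision yourself, the waiting times above can be expressed as a simple lookup. This is a minimal sketch: the names `MIN_WAIT` and `can_decide` are hypothetical, and the values are just the minimums suggested above, with a full day assumed for conversions.

```python
from datetime import datetime, timedelta

# Minimum time to wait before choosing a winner, per metric.
MIN_WAIT = {
    "opens": timedelta(hours=2),
    "clicks": timedelta(hours=1),
    "conversions": timedelta(days=1),
}

def can_decide(metric, sent_at, now):
    """Return True once the minimum waiting time for the metric has passed."""
    return now - sent_at >= MIN_WAIT[metric]

sent_at = datetime(2024, 1, 1, 8, 0)
one_hour_later = datetime(2024, 1, 1, 9, 0)
```

Here `can_decide("clicks", sent_at, one_hour_later)` is true, while the same check for `"opens"` is still false because only one of the two hours has passed.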
